What is xVRM?

My original plans for virtual reality, code-named VRM (virtual reality for the masses), outlined a simple virtual reality system. With a homebrew magnetic tracker and a cheap projection screen, it would have offered the full potential of virtual reality while staying flexible and inexpensive. However, not everything worked as planned: the magnetic tracker turned out to be next to impossible to build with what I had at the time, and the screens were far from cheap. Alas, virtual reality seemed rather out of reach.

It is from these ashes that xVRM was born.

The first tenet of xVRM is that the typical ideas of what virtual reality should be are rather unreasonable. Large screens or a head-mounted display that projects a perfect virtual world are difficult to deliver. A virtual reality tracking system needs an insane degree of accuracy over a wide range, and the displays need a fairly high resolution at a large viewing size. Right now, all of that is available only at a rather ridiculous price. Not to mention that most of it is old technology that’s probably not even available anymore.

- Ascension Space Pad Tracker (“affordable”): $1500, and runs off an ISA card

- Ascension Flock of Birds Tracker: ~$25000, or something like that

- head-mounted displays: $500-$10000+, and probably aren’t that healthy

- digital projector: $800-$2000+, and you’d most likely need more than one

Which, quite frankly, sucks.

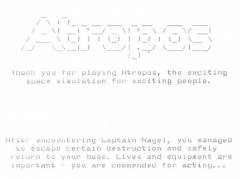

The second tenet of xVRM is that real life handles reality much better than computers do. The laws of physics react instantaneously, so there is no lag; let them handle rendering, collision detection, and sensory feedback. Leaning more toward being a simulation than a “virtual experience”, xVRM offers a chance to realize virtual worlds now.

What do I need for xVRM?

As of this writing, xVRM doesn’t really exist in any form beyond an idea; however, if it were to be built, a few things would be required:

An Arena

Otherwise known as a fairly large open space. Be it outside, inside, or both, some sort of staging area is necessary for an xVRM simulation. Which kind depends on what you’re simulating.

Some Players

xVRM is generally suited to some sort of game; instead of AI, you need real people to play with you. Unfortunately, that’s a bit harder than adding bots to a game, but fortunately, real people are trickier opponents (well, most of them).

Cool Technology

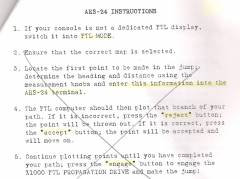

To make the simulation come alive, you need some form of simulation technology. Whether it takes the form of laser tag, some sort of wireless fencing, or a vehicle simulator, the technology is what brings it all together. In fact, it’s essentially the whole thing.

What would an xVRM game be like?

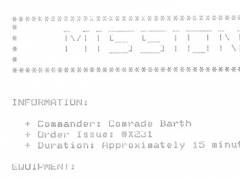

For the most part, it depends on what you’re trying to simulate. Some examples:

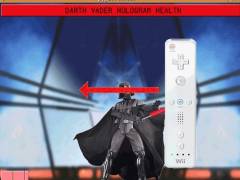

- Modern Combat: realistic laser tag weapons and rules, some sort of objective gear (bombs to be defused?)

- Unreal Tournament: insane laser tag guns, less realistic suits, capturable flags, respawn points

- Medieval Combat: cableless fencing-like gear, perhaps some type of siege engine / base system?

- Planetary Combat: simulated capital spaceships with flight hangars and miniature fighter plane simulators, mech simulators and futuristic laser tag gear for ground missions, tied in with mission support from the capital ship

Ideally, they’d all use some sort of universal, cross-compatible protocol. Maximum hardware compatibility would allow gear to be reused between simulations, which would save quite a bit of development time, meaning more time to play!
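xVRM doesn’t define any protocol yet, so the following is only a minimal sketch of what one might look like. It assumes a fixed-size binary message that any device (a laser tag gun, a fencing sensor, a vehicle simulator) could send to a central game server. The field layout, the event codes, and the names `GameEvent`, `encode`, and `decode` are all hypothetical, chosen purely for illustration.

```python
import struct
from dataclasses import dataclass

# Hypothetical wire format, big-endian ("network order"):
# version (1 byte), event type (1 byte), source player id (2 bytes),
# target player id (2 bytes), value (2 bytes) -> 8 bytes total.
PACKET_FORMAT = ">BBHHH"
PROTOCOL_VERSION = 1

# Hypothetical event codes shared by every kind of gear
EVENT_HIT = 1      # e.g. a laser tag sensor or fencing blade registers a hit
EVENT_RESPAWN = 2  # e.g. a player reaches a respawn point
EVENT_CAPTURE = 3  # e.g. a flag or objective changes hands


@dataclass
class GameEvent:
    event_type: int
    source_id: int
    target_id: int
    value: int = 0  # meaning depends on event type (damage, points, ...)


def encode(event: GameEvent) -> bytes:
    """Pack an event into the fixed 8-byte wire format."""
    return struct.pack(
        PACKET_FORMAT,
        PROTOCOL_VERSION,
        event.event_type,
        event.source_id,
        event.target_id,
        event.value,
    )


def decode(data: bytes) -> GameEvent:
    """Unpack a message, rejecting versions this code doesn't understand."""
    version, event_type, source_id, target_id, value = struct.unpack(
        PACKET_FORMAT, data
    )
    if version != PROTOCOL_VERSION:
        raise ValueError(f"unsupported protocol version {version}")
    return GameEvent(event_type, source_id, target_id, value)


# Usage: player 7's laser tag gun hits player 12 for 25 points of damage
packet = encode(GameEvent(EVENT_HIT, source_id=7, target_id=12, value=25))
received = decode(packet)
```

Putting a version byte first is one way to let older gear reject messages it can’t parse instead of misreading them. A small fixed-size layout also keeps decoding simple enough for cheap microcontrollers, so the same gun or sensor could be reused across different simulations.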